So that’s a wrap! Another trimester at SAE is complete, and what a great one it has been. This course is really moving along at a rapid speed, and now that the end is in sight, the excitement is building about having a qualification in an area I’m passionate about. The tasks we’ve learnt this trimester, particularly those relating to surround sound and mastering, have been really beneficial to my learning. I genuinely enjoyed creating a 5.1 surround sound mix (the more speakers the better, right?), and learning how to master is going to be very useful for both my music and other productions in the future.

Firstly, I’d like to make a really important observation before we step into the projects I have worked on this Trimester, and that is recognising how far I have actually come this year in terms of personal development. When I read back over my post-mortem blog from my first studio unit back in Trimester 3 with Steve Callan, it’s cool to reflect on that and observe where I am now and my achievements this year, particularly in music production and now film scoring.

So let’s now dive into the projects I have been involved with for Trimester 5. Reflecting on my studio units this year, I did an electronic EP in Tri 3 with Matt, then an electronic EP on my own in Tri 4, so I thought that, to mix it up a bit, I could record a band in the studio this time. However, in the meantime I had some great ideas for some electronic music as well. So, I did both! I kept in mind that I had the option to drop something if it all got too much to handle.

So as per my scope document I decided to record a band that I actually play in myself. It’s a four piece acoustic setting consisting of flute, guitar, piano and vocals. We performed recently at the Royal Perth Yacht Club for a 70th birthday party, lol, but it was fun! I really liked the sound we had during rehearsals and so I asked the group into the studio to record a bunch of classic cover songs as listed below:

- Scarborough Fair

- Dreams

- With A Little Help From My Friends

- Big Spender

- It’s My Party

- Money, Money

- My Cherie Amour

- Say A Little Prayer

Despite running out of time towards the end of the Trimester to fully mix and process these tracks appropriately for my project, it was great to get everyone into the studio and have that experience of setting up all the equipment, practising suitable microphone choice and placement techniques, getting signal into the C75 console and attempting to achieve the best sound possible, which was obviously the whole idea of the project. So on reflection, despite not having enough time to complete this EP as planned in the scope document, it was still really beneficial practice in recording live instrumentation in a studio environment and was a great experience. Recording bands is not my forte, nor do I enjoy it anywhere near as much as writing music, but I recognise that it is a very important skill to have, especially for recording live instruments to overdub into my electronic music and film scoring.

Towards the end of this eight-hour session I had Kat, one of the vocalists and a very good friend of mine, stay back and record the vocals with me for a song she had written named ‘Soul Calling’. (By the way, this group is my church band, which I play with weekly on Sundays.) Basically, she had given me the piano accompaniment that she recorded at home, and we used that as a backing track to record the lead vocal line. She had not initially written this song for me to produce; that was something established later, when Kat said to me, “I think this track needs something a little more electronically in order to bring it to life!” I was certainly in the same frame of mind (as I usually am with Kat) and it was a collaboration that came together just beautifully in the end.

We were both extremely happy with the final result of the song. With Kat being classically trained vocally and self-taught on piano, the track originally had a classical edge to it, but I could hear the drive that it needed in the bass line. So the task of bringing it to life, so to speak, was not only challenging but extremely rewarding in the end. I really had a lot of fun playing the role of producer and co-writer for the final result.

This track then became an extra track on the three-track electronic EP for the second half of my major project. To then have Kat with me for the final mixdown in the mastering suite was awesome, even making a final edit to a couple of the sub bass notes in Pro Tools. To work with a singer-songwriter in this way (and also a good friend of mine), knowing that Kat is completely on the same page as me musically, is a dream come true and only the beginning of an exciting future of music production for both of us. We look forward to writing more music together.

‘Soul Calling’ is very much a journey when you actually sit down and listen to the whole 6-minute track from start to finish. Yes, it is long. But it’s long for a reason. It’s a journey for Kat, lyrically and melodically, and the lyrics reflect this clearly. As the producer Kat had approached for the track, I didn’t want to stray from her original arrangement at all if I didn’t have to. We have spoken of various remixes and radio edits of the track in the future, even re-recording the vocals in a more ‘house’ style as Kat suggested. So it will be fun to see what else we come up with here.

So aside from that incredible addition to the electronic part of my project, I wrote three original tracks, each from a different genre of music. I wanted to not only express my eclectic taste in music, but also my ability to produce in various styles that I really enjoy.

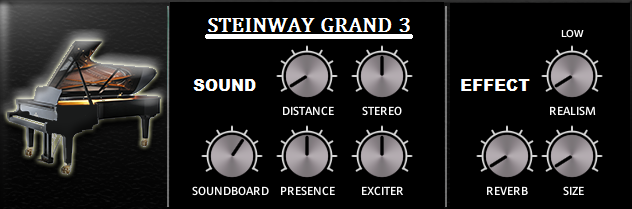

The first track, ‘Expand’, began with an awesome stepping chord progression that I played around with on a rainy day. The bass line then followed and eventually became the core element of the track, driving the piece into a piano melody that seemed to just fit perfectly to me. Add some melodic piano harmonies and establish the arrangement and bam, you have yourself a basic EDM track. I think what makes this track for me is the chord progression, which descends and ascends again over a 16-bar loop. These kinds of progressions come to me when I’m just experimenting with what sounds nice on the piano.

The second track on the EP is ‘Lax’. Well, what can I say? I think I actually had a tear the day I finished the basic arrangement of this track. It might sound lame, but as I’ve said to people, this major/minor chord progression, jazzy triplet-filled melody and groovy percussion is purely me and my soul talking. Once I got the drum rhythm right and the two chord progressions bouncing back and forth, the melodies just came together, inspired by a lot of chill-out house music I used to listen to throughout my 20s. Cocktails and balmy sunsets by the beach are what this track represents for me, and I think it’s one of the vibes I most enjoy creating musically. I look forward to writing more music in this style too.

The final track on the EP is an acoustic piano and vocal piece, ‘Exit’, that I wrote over the trimester about a relationship I was in many years ago and had to get out of in order to move on with my life and find out who I really was. Deep, right? The lyrics don’t particularly make sense if you follow them, but I used words that came from the heart and fit the melodic phrases I had initially written when I established the chord progression. This was a lot of fun to write. I love acoustic music, and this song is sort of a journey for me, I guess.

Technically speaking, the Neumann U87 sounded beautiful in the end and, as Brandon confirmed a few weeks back, was a great microphone choice for my voice. I recorded a few takes of harmonies too, which I then added into the final mix. Oh, and not to mention getting my hands on a Waves Audio Tuner that was on special recently, which helps a great deal in fine-tuning some vocal notes. However, I’ve noticed you can easily go overboard and your vocal can begin to sound unnatural, as you can slightly hear in some notes during this song. More time to shape it up better is definitely on the cards.

So that’s all my personal projects covered. There is one more rather large project that I have not mentioned yet, and this was a project that was offered to us as a class earlier in the Trimester: the audio treatment for the Tri 3 film, Pablo. This was my first experience of working on a film project, and it was a brilliant experience and good practice for our Tri 6 major project, in which we are working on a short film as a class. Pablo is an indigenous film that required ethnic percussion in the score pretty much throughout the film.

Personally, I was a bit excited about this film as I do love African and ethnic percussion. I find it’s extremely moving and powerful stuff. So I’ll admit it was a lot of fun working on the film score for Pablo, along with fellow student Danielle Carlow. I wrote about 4 of the scenes and Dani wrote 2 in the end. Having not scored before, I was constantly checking with Jarvis, the film’s director, as to whether or not the music I was writing was creating the right feel for the scene, which was a priority for me. So when Jarvis explained that I had nailed it, I was so happy.

Again it might sound lame, but at the showcase I had a bit of an emotional moment watching Pablo on the big screen with a packed theatre of students and family members. It was a proud and special moment of having the audience subconsciously connect with the film via the scoring I had written. To see it all come together and to feel the audience connecting with the film in a certain way because of not only the story but particularly the scoring, was a moment of realisation that this is indeed my passion.

What was happening in that very moment, contributing largely to the emotion that is created when music is attached to a visual medium, is what I’ve now realised I’m all about, and it really was a wonderful experience to be involved with this film. To have a special thank you from lecturer Andy Hill, in front of everyone, to Tri 5 students Danielle Carlow and Ben Pfeiffer, who wrote the original score, was a very proud moment and an awesome feeling indeed. I look forward to more film scoring and writing of music in the future, and particularly for our upcoming short film ‘Unheard’ for CIU330 next Trimester. We have been in pre-production for this film during CIU212 this Trimester and I’m really looking forward to the end result of this final major project for the degree.